This is a short tutorial that shows you how to authenticate your users at the edge without hitting your servers at all.

tl;dr

Lambda functions deployed at the edge locations of CloudFront authenticate your users by validating their JWTs and returning the claims.

"An abstract painting of a person standing on the edge of the world" - DALL-E


0. Motivation

When you build a client application (e.g. web, mobile, desktop), you very likely have a component that shows which user is currently logged in to your application.


It is convenient to have an endpoint that you send a valid access token to and that returns the information about the user that you would like to display. That endpoint can look like https://auth.example.com/user or https://auth.example.com/whoami. In this tutorial we are going to build a https://whoami.example.com endpoint.

This only works if all the user information that we want to display is already included in the access token itself. So why would you send an access token anywhere if you already hold the information you need?

- Accessing the information in the token requires knowledge about how to validate and decode the token, and it means shipping the additional modules or libraries needed for that to the client. Calling an endpoint only requires an HTTP client, which every client has natively.

- If you have multiple clients (e.g. web, mobile, desktop) you do not want to duplicate the logic of validating and decoding across all clients. An endpoint provides a central location for that logic.
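From the client's side, calling such an endpoint might look like the following sketch. The helper name `fetchWhoami`, the `FetchLike` type and the shape of the returned claims are assumptions made for illustration; only the URL comes from this tutorial.

```typescript
// Minimal structural type for a fetch-like function, so the sketch does not
// depend on one particular runtime (browser, Node.js 18+, React Native, ...).
type FetchLike = (
  url: string,
  init: { headers: Record<string, string> }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical helper: sends the access token and resolves with its claims.
async function fetchWhoami(
  accessToken: string,
  fetchImpl: FetchLike,
  url = "https://whoami.example.com"
): Promise<Record<string, unknown>> {
  const response = await fetchImpl(url, {
    headers: { Authorization: `Bearer ${accessToken}` },
  });
  if (!response.ok) {
    // The edge function in this tutorial answers invalid tokens with a 401.
    throw new Error(`whoami request failed with status ${response.status}`);
  }
  return (await response.json()) as Record<string, unknown>;
}
```

In a browser or a recent Node.js version you would pass the global `fetch` as `fetchImpl`. Injecting it keeps the helper easy to test without a network.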

Calling an additional endpoint to receive user information adds latency to the overall experience of your users, but this tutorial is all about keeping that latency in the two-digit millisecond range. If your /whoami endpoint is still the latency bottleneck of your application, then congratulations, you have a blazing fast application.

1. Prerequisites

- AWS SAM CLI installed

- JWTs that are signed with keys published via JWKS

- JWKS endpoint is edge optimized (Check out our blog post)

2. Lambda function

2.1. Coding

Lambda@Edge supports Node.js and Python as runtimes. We write the function in TypeScript for this tutorial and bundle it to JavaScript for the Node.js runtime. The code is loosely based on instructions from Supertokens.

We hardcode our JWKS endpoint https://auth.example.com/jwt/jwks.json. You would normally inject URLs like that as environment variables. But since we plan to deploy this function as a Lambda@Edge function we cannot do that, because Lambda@Edge does not support environment variables.

./src/index.ts

    import { CloudFrontRequestHandler, CloudFrontRequestEventRecord } from "aws-lambda";
    import JsonWebToken, { JwtHeader, SigningKeyCallback } from "jsonwebtoken";
    import jwksClient from "jwks-rsa";

    // Created once per execution environment so that fetched signing keys
    // can be reused across invocations.
    const client = jwksClient({
      jwksUri: "https://auth.example.com/jwt/jwks.json",
    });

    // Extracts the token from the Authorization header of the viewer request.
    const getToken = (record: CloudFrontRequestEventRecord): string => {
      // The header is missing from the map entirely if the client did not send it.
      const authorization = record.cf.request.headers.authorization?.[0]?.value;
      const [, token] = authorization?.split(" ") ?? [];
      if (!token) {
        throw new Error("No token found in Authorization header");
      }
      return token;
    };

    // Looks up the public key that matches the "kid" in the token header.
    const getKey = (header: JwtHeader, callback: SigningKeyCallback): void => {
      client.getSigningKey(header.kid, (err, key) => {
        if (err || !key) {
          return callback(err ?? new Error("Signing key not found"));
        }
        callback(null, key.getPublicKey());
      });
    };

    // Verifies the token and resolves with its decoded claims.
    const verifyToken = (token: string): Promise<string | JsonWebToken.JwtPayload> =>
      new Promise((resolve, reject) => {
        JsonWebToken.verify(token, getKey, {}, (err, decoded) => {
          if (err || !decoded) {
            return reject(new Error("No valid token"));
          }
          resolve(decoded);
        });
      });

    const handler: CloudFrontRequestHandler = async (event) => {
      try {
        const verifiedToken = await verifyToken(getToken(event.Records[0]));
        return {
          status: "200",
          body: JSON.stringify(verifiedToken),
          headers: {
            "content-type": [{ key: "Content-Type", value: "application/json" }],
          },
        };
      } catch (error) {
        // A missing or invalid token ends up here.
        return {
          status: "401",
          statusDescription: "Unauthorized",
          body: error instanceof Error ? error.message : "Unauthorized",
        };
      }
    };

    export default handler;

2.2. Building

A Lambda@Edge function cannot use $LATEST; it must be referenced by a numbered version (Docs). Luckily AWS SAM publishes a new version automatically whenever the function changes if AutoPublishAlias is set to an alias name.

The Lambda execution role needs lambda.amazonaws.com and edgelambda.amazonaws.com as trusted entities so that the function can be executed at the edge (Docs).

The stack outputs the ARN of our lambda function with its updated version number. That output later serves as input parameter when creating our Cloudfront distribution.

api.yaml

    AWSTemplateFormatVersion: "2010-09-09"
    Transform: AWS::Serverless-2016-10-31
    Description: Whoami api

    Resources:
      WhoamiFunction:
        Type: AWS::Serverless::Function
        Properties:
          CodeUri: dist
          Handler: index.default
          Runtime: nodejs16.x
          Timeout: 3
          AutoPublishAlias: EdgeFunction
          Role: !GetAtt WhoamiFunctionRole.Arn

      WhoamiFunctionRole:
        Type: AWS::IAM::Role
        Properties:
          AssumeRolePolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - lambda.amazonaws.com
                    - edgelambda.amazonaws.com
                Action:
                  - 'sts:AssumeRole'

    Outputs:
      WhoamiApi:
        Value: !Ref WhoamiFunction.Version

We bundle our Typescript code with esbuild. AWS SAM normally does a great job in doing that for us, but for two reasons we cannot rely on SAM for this tutorial:

- AWS SAM does not minify our code before zipping and uploading it to S3. That results in a ZIP file of 1.7 MB. The maximum size of a Lambda function for a viewer request is 1 MB.

- AWS SAM does not properly bundle the node_modules folder when a stack is not built from template.yaml, but from a different file like api.yaml in our case.
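The size constraint from the first point can be expressed as a tiny check that you could run against the zipped bundle before deploying. This is only a sketch: the names `VIEWER_EVENT_MAX_BYTES` and `fitsViewerLimit` are made up, and we assume that the 1 MB limit means 1024 * 1024 bytes.

```typescript
// Assumption: "1 MB" is interpreted as 1024 * 1024 bytes of zipped code.
const VIEWER_EVENT_MAX_BYTES = 1024 * 1024;

// Returns true if a zipped bundle of the given size could be attached
// to a viewer-request or viewer-response event.
function fitsViewerLimit(zipSizeBytes: number): boolean {
  return zipSizeBytes <= VIEWER_EVENT_MAX_BYTES;
}

// The unminified 1.7 MB bundle mentioned above is too large.
const unminifiedBytes = Math.round(1.7 * 1024 * 1024);
console.log(fitsViewerLimit(unminifiedBytes)); // false
```

In a build script you would feed it the real file size, for example from `fs.statSync` in Node.js.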

package.json

    {
      "name": "whoami-api",
      "version": "1.0.0",
      "main": "index.js",
      "scripts": {
        "build": "esbuild ./src/index.ts --bundle --outdir=dist --platform=node --minify"
      },
      "devDependencies": {
        "@types/aws-lambda": "^8.10.110",
        "@types/jsonwebtoken": "^9.0.1",
        "esbuild": "^0.17.7"
      },
      "dependencies": {
        "jsonwebtoken": "^9.0.0",
        "jwks-rsa": "^3.0.1"
      }
    }

When we want to build our project we have to run esbuild before AWS SAM. It might look something like this if you are using pnpm:

    pnpm build && sam build -t api.yaml

2.3. Testing

Another nice feature of AWS SAM is that you can test your function locally before deploying it. In order to test our lambda function we have to create a mock event that looks like an event that it would receive from Cloudfront:

mock-event.json

    {
      "Records": [
        {
          "cf": {
            "request": {
              "headers": {
                "authorization": [
                  {
                    "key": "Authorization",
                    "value": "Bearer 123456"
                  }
                ]
              }
            }
          }
        }
      ]
    }

Run the following command to test our function:

    sam local invoke -t api.yaml -e mock-event.json WhoamiFunction

If you have used exactly the same mock event, your function should return a 401 error. If you replace the token with an actual, valid access token, your function should return the payload of that access token.
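If you switch between tokens often, generating the mock event may be more convenient than editing the JSON by hand. The following sketch uses a hypothetical helper `buildMockEvent`; it only produces the subset of a CloudFront viewer-request event that our handler reads.

```typescript
// Hypothetical helper: builds the minimal event shape used in mock-event.json.
function buildMockEvent(token: string) {
  return {
    Records: [
      {
        cf: {
          request: {
            headers: {
              // CloudFront lowercases header names in the map and keeps
              // the original casing in "key".
              authorization: [{ key: "Authorization", value: `Bearer ${token}` }],
            },
          },
        },
      },
    ],
  };
}

// Print the event so it can be redirected into mock-event.json.
console.log(JSON.stringify(buildMockEvent("123456"), null, 2));
```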

2.4. Deploying

If your tests were successful we can deploy our function using AWS SAM CLI. In other tutorials you might see the use of samconfig.toml. Since we are using two different template files (api.yaml and infrastructure.yaml) for this tutorial we pass all parameters as command line options instead:

    sam deploy --stack-name whoami-api --s3-bucket your_bucket --s3-prefix api --region us-east-1 --capabilities CAPABILITY_IAM

Replace your_bucket with the name of an S3 bucket in your account.

3. Lambda@Edge and Cloudfront

Our Lambda function has no downstream dependencies on any of our other services. That makes it a perfect candidate for Lambda@Edge, where we can execute Lambda functions closer to our users.

Lambda@Edge is a feature of CloudFront. Normally you need to specify an origin server that CloudFront forwards requests to. But we deploy our Lambda function as an edge function that intercepts viewer requests and answers them itself, so no request ever hits our origin server. Therefore we can configure a dummy origin as origin server.
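The reason the origin is never contacted is that a viewer-request function can return a response object instead of the request. The sketch below illustrates that difference with simplified local types (the real ones come from the aws-lambda type package); the /passthrough path and the function name `viewerRequest` are purely hypothetical.

```typescript
// Simplified stand-ins for the CloudFront request and response types.
interface EdgeRequest {
  uri: string;
  headers: Record<string, { key?: string; value: string }[]>;
}
interface EdgeResponse {
  status: string;
  statusDescription?: string;
  body?: string;
}

// Returning a response makes CloudFront answer the viewer directly.
// Returning the request lets it continue towards the cache and origin.
function viewerRequest(request: EdgeRequest): EdgeRequest | EdgeResponse {
  if (request.uri === "/passthrough") {
    return request; // hypothetical path that would still reach the origin
  }
  return { status: "200", statusDescription: "OK", body: "answered at the edge" };
}
```

Our whoami handler always takes the second branch, either with a 200 or a 401, which is why the dummy origin is never called.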

The following AWS SAM template file describes in full detail the CloudFront distribution we want to create. The important part is LambdaFunctionAssociations, where we attach our Lambda function to the viewer-request event. The ARN is referenced by a parameter. This is where we use the output of our api stack as an input.

We tried different ways of having our Lambda function and CloudFront distribution in the same template file, but all our attempts failed for different reasons:

- We referenced the Lambda ARN from the same template file and added a DependsOn to the distribution. But versions are not immediately published once a Lambda function is created. That caused the distribution to fail, because the referenced version number was not available.

- We wanted to use Export and !ImportValue to connect two different CloudFormation stacks. But once a version number is used by the distribution we could not update the Export value of the api template anymore.

We use whoami.example.com as an alternate domain name and pass it as Aliases. If we want to use an alternate domain name, we also need to pass a certificate that includes that domain name. We have that certificate in our account and pass it as a parameter to the distribution.

infrastructure.yaml

    AWSTemplateFormatVersion: "2010-09-09"
    Transform: AWS::Serverless-2016-10-31
    Description: Whoami infrastructure

    Parameters:
      WhoamiEdgeFunctionArn:
        Type: String
        Description: Arn of whoami edge function including version
      CertificateArn:
        Type: String
        Description: Arn of certificate for domain that is connected to Cloudfront

    Resources:
      CloudfrontDistribution:
        Type: AWS::CloudFront::Distribution
        Properties:
          DistributionConfig:
            Aliases:
              - "whoami.example.com"
            Comment: Whoami
            DefaultCacheBehavior:
              AllowedMethods:
                - "HEAD"
                - "GET"
                - "OPTIONS"
              CachedMethods:
                - "HEAD"
                - "GET"
              CachePolicyId: "4135ea2d-6df8-44a3-9df3-4b5a84be39ad"
              Compress: true
              SmoothStreaming: false
              TargetOriginId: "noorigin.com"
              ViewerProtocolPolicy: "https-only"
              LambdaFunctionAssociations:
                - LambdaFunctionARN: !Ref WhoamiEdgeFunctionArn
                  EventType: "viewer-request"
                  IncludeBody: false
            Enabled: true
            HttpVersion: http2
            IPV6Enabled: true
            PriceClass: PriceClass_All
            Staging: false
            Origins:
              - DomainName: "noorigin.com"
                Id: "noorigin.com"
                OriginPath: ""
                CustomOriginConfig:
                  HTTPPort: 80
                  HTTPSPort: 443
                  OriginProtocolPolicy: "https-only"
                  OriginSSLProtocols:
                    - "TLSv1.2"
                  OriginReadTimeout: 30
                  OriginKeepaliveTimeout: 5
            ViewerCertificate:
              AcmCertificateArn: !Ref CertificateArn
              SslSupportMethod: "sni-only"
              MinimumProtocolVersion: "TLSv1.2_2021"

Before we can deploy we need to build this project:

    sam build -t infrastructure.yaml

For the deployment we also need to pass parameters:

    sam deploy --stack-name whoami-infrastructure --s3-bucket your_bucket --s3-prefix infrastructure --region us-east-1 --capabilities CAPABILITY_IAM --parameter-overrides WhoamiEdgeFunctionArn=your_lambda_arn CertificateArn=certificate_arn

Note: sam deploy deploys whatever is in the .aws-sam folder. Therefore it is crucial not to mix up the build commands for api.yaml and infrastructure.yaml. Always build the project first that you want to deploy.

Replace your_lambda_arn with the output value from your api template and certificate_arn with your certificate arn.
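Since Lambda@Edge only accepts a numbered version of a function in us-east-1, it can be worth checking the value before passing it to the deploy command. The following is a rough sketch; the helper name `isVersionedEdgeArn`, the account ID and the function name are made up for illustration, and the pattern deliberately ignores other AWS partitions.

```typescript
// Matches a Lambda function ARN in us-east-1 that ends in a numeric version.
const VERSIONED_EDGE_ARN =
  /^arn:aws:lambda:us-east-1:\d{12}:function:[A-Za-z0-9_-]+:\d+$/;

// Hypothetical helper: true only for ARNs with a numbered version qualifier.
function isVersionedEdgeArn(arn: string): boolean {
  return VERSIONED_EDGE_ARN.test(arn);
}

// A numbered version is accepted.
console.log(isVersionedEdgeArn("arn:aws:lambda:us-east-1:123456789012:function:whoami:3")); // true
// An unqualified ARN (which would mean $LATEST) is rejected.
console.log(isVersionedEdgeArn("arn:aws:lambda:us-east-1:123456789012:function:whoami")); // false
```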

4. Setting up Route 53

We use Route 53 to create the https://whoami.example.com endpoint. This last step is very straightforward, but it makes all the difference in the end. The HostedZoneId depends on your environment. Therefore we pass it as a parameter as well. You will need to update your deploy command from the previous step to also include that new parameter.

infrastructure.yaml

    AWSTemplateFormatVersion: "2010-09-09"
    Transform: AWS::Serverless-2016-10-31
    Description: Whoami infrastructure

    Parameters:
      CertificateArn:
        Type: String
        Description: Arn of certificate for domain that is connected to Cloudfront
        Default: "arn:aws:acm:us-east-1:292867823530:certificate/19b2d870-8af6-4b6d-93cb-1ddb92b7a8e2"
      WhoamiEdgeFunctionArn:
        Type: String
        Description: Arn of whoami edge function including version
      HostedZoneId:
        Type: String
        Description: Id of hosted zone for Route 53

    Resources:
      CloudfrontDistribution:
        Type: AWS::CloudFront::Distribution
        Properties:
          DistributionConfig:
            Aliases:
              - "whoami.example.com"
            Comment: Whoami
            DefaultCacheBehavior:
              AllowedMethods:
                - "HEAD"
                - "GET"
                - "OPTIONS"
              CachedMethods:
                - "HEAD"
                - "GET"
              CachePolicyId: "4135ea2d-6df8-44a3-9df3-4b5a84be39ad"
              Compress: true
              SmoothStreaming: false
              TargetOriginId: "noorigin.com"
              ViewerProtocolPolicy: "https-only"
              LambdaFunctionAssociations:
                - LambdaFunctionARN: !Ref WhoamiEdgeFunctionArn
                  EventType: "viewer-request"
                  IncludeBody: false
            Enabled: true
            HttpVersion: http2
            IPV6Enabled: true
            PriceClass: PriceClass_All
            Staging: false
            Origins:
              - DomainName: "noorigin.com"
                Id: "noorigin.com"
                OriginPath: ""
                CustomOriginConfig:
                  HTTPPort: 80
                  HTTPSPort: 443
                  OriginProtocolPolicy: "https-only"
                  OriginSSLProtocols:
                    - "TLSv1.2"
                  OriginReadTimeout: 30
                  OriginKeepaliveTimeout: 5
            ViewerCertificate:
              AcmCertificateArn: !Ref CertificateArn
              SslSupportMethod: "sni-only"
              MinimumProtocolVersion: "TLSv1.2_2021"

      RecordSet:
        Type: AWS::Route53::RecordSet
        Properties:
          Name: "whoami.example.com."
          Type: A
          AliasTarget:
            DNSName: !GetAtt ["CloudfrontDistribution", "DomainName"]
            HostedZoneId: Z2FDTNDATAQYW2
            EvaluateTargetHealth: false
          HostedZoneId: !Ref HostedZoneId

5. Conclusion

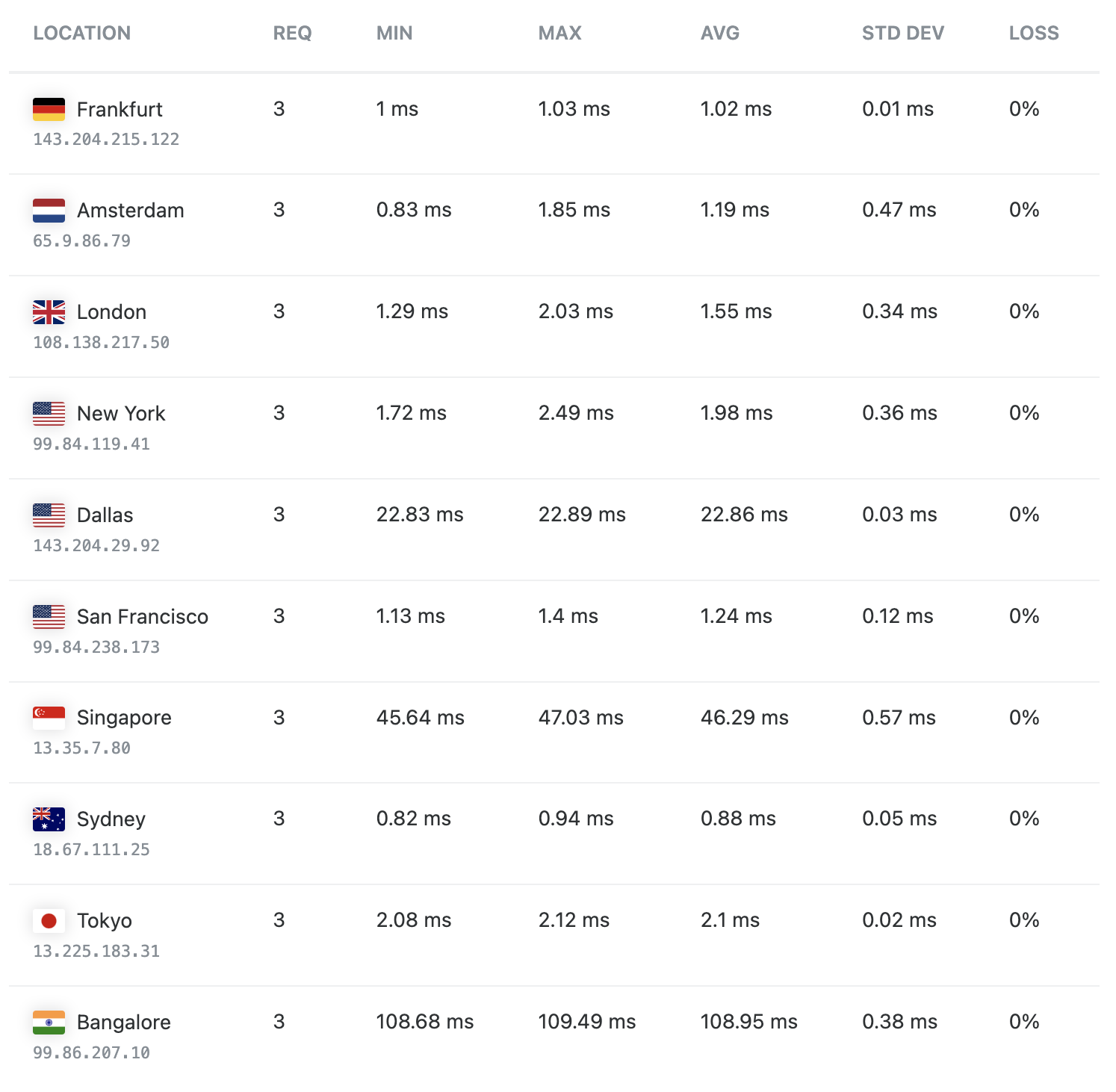

Your overall latency depends on your auth.example.com endpoint, but the whoami.example.com endpoint is blazing fast around the world.
